Over the last year, I’ve spent a good deal of time looking for ways to integrate the growing number of linked data services into MarcEdit. These services, mainly revolving around vocabularies, provide some interesting opportunities for augmenting our existing MARC data, or enhancing local systems that make use of these particular vocabularies. Examples like those at the Bentley (http://archival-integration.blogspot.com/2015/07/order-from-chaos-reconciling-local-data.html) are real-world demonstrations of how computers can take advantage of these endpoints when they are available.

In MarcEdit, I’ve been creating and testing linking tools for close to a year now, and one of the areas I’ve been waiting to explore is whether libraries can use linking services to build their own authorities workflows. Conceptually, it should be possible: the necessary information exists, and it’s really just a matter of putting it together. So, that’s what I’ve been working on. Using the linked data libraries found within MarcEdit, I’ve been working to create a service that will help users identify invalid headings and the records where those headings reside.

Working Wireframes

Over the last week, I’ve prototyped this service. The way that it works is pretty straightforward. The tool extracts the data from the 1xx, 6xx, and 7xx fields, and if they are tagged as being LC controlled, it queries the id.loc.gov service to see what information it can learn about the heading. Additionally, since this tool is designed for batch work, there is a high likelihood that headings will repeat, so MarcEdit also builds a local cache of headings. This way it can check against the local cache rather than the remote service when possible. The local cache will keep growing, with entries set to expire after a month. I’m still toying with what to do with the local cache, expirations, and what the best way to keep it in sync might be.

I’d originally considered pulling down the entire LC names and subjects files, but for a desktop application, this didn’t make sense. Together, these files, uncompressed, consume gigabytes of data. Within an indexed database, this would continue to be true. And again, the data would need to be updated regularly. So, I’m looking for an approach that will give some local caching without requiring the user to download and manage huge data files.

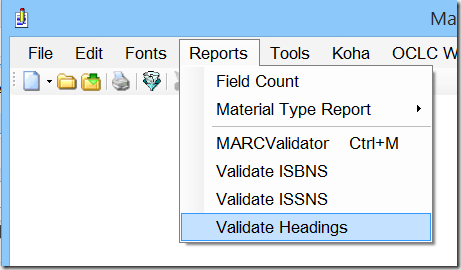
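To make the caching idea a bit more concrete, here is a minimal sketch in Python. This is not MarcEdit's actual code: the `HeadingCache` class, the 30-day reading of "a month", the whitespace/case normalization, and the injected `lookup` function (standing in for the call to id.loc.gov) are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Assumption: "a month" is treated as 30 days.
ONE_MONTH_SECONDS = 30 * 24 * 60 * 60


class HeadingCache:
    """Hypothetical in-memory cache of heading lookups with a time-to-live."""

    def __init__(self,
                 lookup: Callable[[str], Optional[str]],
                 ttl: float = ONE_MONTH_SECONDS,
                 clock: Callable[[], float] = time.time) -> None:
        self._lookup = lookup    # stand-in for the remote query
        self._ttl = ttl          # seconds before an entry expires
        self._clock = clock      # injectable so expiry can be tested
        self._entries: Dict[str, Tuple[float, Optional[str]]] = {}
        self.remote_calls = 0    # how often the remote service was asked

    def resolve(self, heading: str) -> Optional[str]:
        """Return the preferred label for a heading, or None if unknown."""
        # Assumed normalization: lowercase and collapse runs of whitespace.
        key = " ".join(heading.lower().split())
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]     # fresh local hit, no remote request
        self.remote_calls += 1
        result = self._lookup(heading)
        self._entries[key] = (now, result)
        return result


if __name__ == "__main__":
    def fake_lookup(heading: str) -> Optional[str]:
        # Stub data taken from the example later in this post.
        table = {
            "Arnim, Bettina Brentano von, 1785-1859":
                "Arnim, Bettina von, 1785-1859",
        }
        return table.get(heading)

    cache = HeadingCache(fake_lookup)
    for _ in range(3):
        cache.resolve("Arnim, Bettina Brentano von, 1785-1859")
    print(cache.remote_calls)  # the heading repeats, so only one remote call
```

In a batch run, repeated headings only cost one remote request each, and negative results (headings the service does not know) are cached as well so they are not re-queried on every record. A real implementation would also need to persist the cache to disk between sessions, which this sketch leaves out.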

Anyway — the function is being implemented as a Report. Within the Reports menu in the MarcEditor, you will eventually find a new item titled Validate Headings.

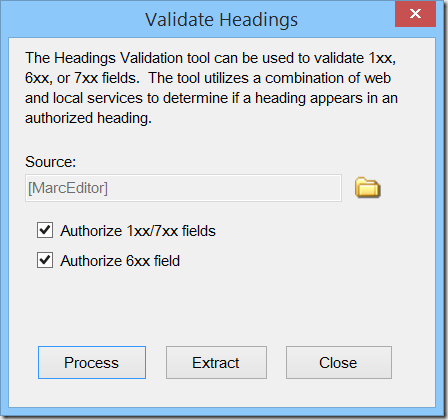

When you run the Validate Headings tool, you will see the following window:

You’ll notice that there is a Source file. If you come from the MarcEditor, this will be prepopulated. If you come from outside the MarcEditor, you will need to define the file that is being processed. Next, you select the elements to authorize, then click Process. The Extract button is disabled until the data run has finished. Once the run is complete, users can extract the records with invalid headings.

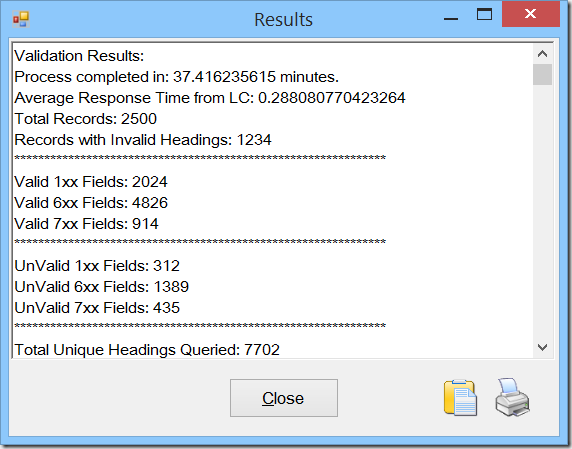

When completed, you will receive the following report:

This includes the total processing time, average response from LC’s id.loc.gov service, total number of records, and the information about how the data validated. Below, the report will give you information about headings that validated, but were variants. For example:

Record #846

Term in Record: Arnim, Bettina Brentano von, 1785-1859

LC Preferred Term: Arnim, Bettina von, 1785-1859

This would be marked as an invalid heading, because the heading in the record is not the preferred form. But the reporting tool returns the preferred LC label so the user can see how the data should currently be structured. Actually, now that I’m thinking about it, I’ll likely include one more value: the URI for the heading, so you can go directly to the authority file page from this report.
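For readers who want to see the shape of that report entry, here is a small Python sketch of how a lookup result might be classified and printed. The `HeadingResult` class, its field names, and the three status labels are hypothetical and only mirror the report layout shown above; they are not taken from MarcEdit.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeadingResult:
    """Hypothetical container for one heading lookup."""
    record_number: int
    term_in_record: str
    preferred_term: Optional[str]  # None when no match was found

    @property
    def status(self) -> str:
        if self.preferred_term is None:
            return "not found"
        if self.term_in_record == self.preferred_term:
            return "valid"
        # Matched, but the record carries a variant form of the heading.
        return "variant"


def format_variant(result: HeadingResult) -> str:
    """Render a result in the three-line layout used in the report."""
    return (f"Record #{result.record_number}\n"
            f"Term in Record: {result.term_in_record}\n"
            f"LC Preferred Term: {result.preferred_term}")


if __name__ == "__main__":
    result = HeadingResult(
        846,
        "Arnim, Bettina Brentano von, 1785-1859",
        "Arnim, Bettina von, 1785-1859",
    )
    if result.status == "variant":
        print(format_variant(result))
```

Adding the URI mentioned above would be one more optional field on the same structure and one more line in the formatter.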

This report can be copied or printed — and as I noted, when this process is finished, the Extract button is enabled so the user can extract the data from the source records for processing.

Couple of Notes

So, this process takes time to run; there just isn’t any way around it. For this set, there were 7702 unique items queried. Each request to LC averaged 0.28 seconds. In my testing, depending on the time of day, I’ve found that the response time can run anywhere from 0.20 seconds to 1.2 seconds per request. None of those times are that bad individually, but taken in aggregate against 7700 queries, it adds up. If you do the math, 7702*0.2 = 1540 seconds just to ask for the data. Divide that by 60 and you get about 25.7 minutes. Given the total processing time, that leaves about 11 minutes of “other” things happening here. My guess is that the other 11 minutes are being eaten up by local lookups, character conversions (since LC expects UTF-8 and my data was in MARC-8), and data normalization. Since there isn’t anything I can do about the latency between the user and the LC site, I’ll be working over the next week to remove as much local processing time from the equation as possible.
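The arithmetic is easy to replay for the different response times mentioned above. This is just a quick Python sketch; the `total_minutes` helper is a name made up for the example, and it assumes requests are issued one after another with no overlap.

```python
UNIQUE_HEADINGS = 7702  # unique items queried in this test set


def total_minutes(seconds_per_request: float,
                  requests: int = UNIQUE_HEADINGS) -> float:
    """Time spent waiting on the remote service, assuming serial requests."""
    return requests * seconds_per_request / 60


# Best case, measured average, and worst case from the text above.
for rate in (0.20, 0.28, 1.2):
    print(f"{rate:.2f} s/request -> {total_minutes(rate):.1f} minutes")
# 0.20 s/request -> 25.7 minutes
# 0.28 s/request -> 35.9 minutes
# 1.20 s/request -> 154.0 minutes
```

Note that the 25.7 minute figure corresponds to the fastest response time (0.20 seconds); at the 0.28 second average the same number of requests would account for roughly 35.9 minutes on their own.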

Questions — let me know.

–tr