Character sets are likely something most North American catalogers rarely give a second thought to. Our tools and systems are all built around a very Anglo-centric worldview that assumes data is primarily structured in MARC21 and recorded in either MARC-8 or UTF-8. However, when you get outside of North America, the question of characterset, and even MARC flavor for that matter, becomes much more relevant. While many programmers and catalogers who work with library data would like to believe that most data follows a fairly regular set of common rules and encodings, the reality is that it doesn’t. MARC21 is the primary MARC encoding for North American and many European libraries, but it is just one of 40+ different flavors of MARC. And while MARC-8 and UTF-8 are the predominant charactersets in libraries coding in MARC21, move outside of North America and OCLC and you will run into Big5, Cyrillic (codepage 1251), Central European (codepage 1250), ISO-5426, Arabic (codepage 1256), and a range of other localized codepages in use today. A large portion of the international metadata community still relies on these localized codepages when encoding library metadata, and this can be a problem for any North American library looking to use metadata encoded in one of them, or to share data with a library that uses one.

For years, MarcEdit has included a number of tools for handling this soup of character encodings — tools that work at different levels to allow the tool to handle data from across the spectrum of different metadata rules, encodings, and markups. These get broken into two different types of processing algorithms.

Characterset Identification:

This algorithm is internal to MarcEdit and vital to how the tool handles data at a byte level. When working with file streams for rendering, the tool needs to decide if the data is in UTF-8 or something else (for mnemonic processing); otherwise, the data won’t render correctly in the graphical interface. For a long time (and honestly, this is still true today), the byte in the LDR of a MARC21 record that indicates whether a record is encoded in UTF-8 or something else (position 09) simply hasn’t been reliable. It’s getting better, but a good number of systems and tools simply forget (or ignore) this value. More important for MarcEdit, this value is only useful for MARC21: the encoding byte is set in a different field/position in each flavor of MARC. For MarcEdit to handle this correctly, it needed a small, fast algorithm that could reliably identify UTF-8 data at the binary level. And that’s what’s used: a heuristic algorithm that reads bytes to determine if the characterset might be UTF-8 or something else.

Might be? Sadly, yes. There is no way to reliably auto-detect a characterset from the bytes alone. It just can’t happen. Each codepage reuses the same byte values; it just assigns different characters to them. So a tool won’t know how to display textual data without first knowing the set of rules that data was encoded under. It’s a real pain in the backside.
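To make that concrete, here is a minimal sketch (not MarcEdit code; `SameBytesDemo` and `CodePoints` are names made up for illustration) that decodes the same bytes under two encodings that ship with .NET and reports the code points each one produces.

```csharp
using System;
using System.Linq;
using System.Text;

public static class SameBytesDemo
{
    // Decode the bytes under the named encoding and list the resulting
    // characters as U+XXXX code points, so the result does not depend on
    // how the console renders non-ASCII text.
    public static string CodePoints(string encodingName, byte[] data)
    {
        string text = Encoding.GetEncoding(encodingName).GetString(data);
        return string.Join(" ", text.Select(c => "U+" + ((int)c).ToString("X4")));
    }
}
```

Called with the two bytes 0xC3 0xB8, `CodePoints("utf-8", ...)` returns `U+00F8` (ø), while `CodePoints("iso-8859-1", ...)` returns `U+00C3 U+00B8` (Ã¸). Same bytes, different text, and nothing in the bytes themselves says which reading is the right one.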

To solve this problem, MarcEdit uses the following code in an identification function:

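```csharp
// Fragment of the identification function. p is the byte array under test;
// iEType and the RET_VAL_* constants are declared elsewhere in the function.
int x = 0;
int lLen = 0;

try
{
    while (x < p.Length)
    {
        if (p[x] <= 0x7F)
        {
            // Plain ASCII byte: tells us nothing, keep scanning.
            x++;
            continue;
        }
        else if ((p[x] & 0xE0) == 0xC0)
        {
            lLen = 2;   // 110xxxxx: lead byte of a 2-byte sequence
        }
        else if ((p[x] & 0xF0) == 0xE0)
        {
            lLen = 3;   // 1110xxxx: lead byte of a 3-byte sequence
        }
        else if ((p[x] & 0xF8) == 0xF0)
        {
            lLen = 4;   // 11110xxx: lead byte of a 4-byte sequence
        }
        else if ((p[x] & 0xFC) == 0xF8)
        {
            lLen = 5;   // legacy 5-byte form (no longer valid under RFC 3629)
        }
        else if ((p[x] & 0xFE) == 0xFC)
        {
            lLen = 6;   // legacy 6-byte form (no longer valid under RFC 3629)
        }
        else
        {
            // High byte that cannot start a UTF-8 sequence.
            return RET_VAL_ANSI;
        }

        // Every remaining byte of the sequence must look like 10xxxxxx.
        while (lLen > 1)
        {
            x++;
            // >= (not >) so a sequence truncated at the end of the buffer
            // is rejected instead of indexing past the array.
            if (x >= p.Length || (p[x] & 0xC0) != 0x80)
            {
                return RET_VAL_ERR;
            }
            lLen--;
        }

        iEType = RET_VAL_UTF_8;
        x++;
    }
}
catch (System.Exception)
{
    iEType = RET_VAL_ERROR;
}

return iEType;
```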

This function allows the tool to quickly evaluate any data at a byte level and identify whether that data might be UTF-8 or not, which is really handy for my usage.
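If all you need is a yes/no answer, a different route to a similar check is to let the framework’s strict decoder do the validating. This is only a sketch and not what MarcEdit does: `Utf8Check` and `LooksLikeUtf8` are hypothetical names, and this version reports pure ASCII as valid UTF-8 and rejects the old 5- and 6-byte forms.

```csharp
using System;
using System.Text;

public static class Utf8Check
{
    // throwOnInvalidBytes: true makes the decoder raise an exception
    // instead of silently substituting U+FFFD for malformed input.
    private static readonly UTF8Encoding Strict = new UTF8Encoding(false, true);

    // Returns true when every byte sequence in data is well-formed UTF-8.
    public static bool LooksLikeUtf8(byte[] data)
    {
        try
        {
            // GetCharCount walks the whole buffer without building a string.
            Strict.GetCharCount(data);
            return true;
        }
        catch (DecoderFallbackException)
        {
            return false;
        }
    }
}
```

A well-formed result is still only a "might be": plenty of short byte runs in other codepages happen to be valid UTF-8 too.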

Character Conversion

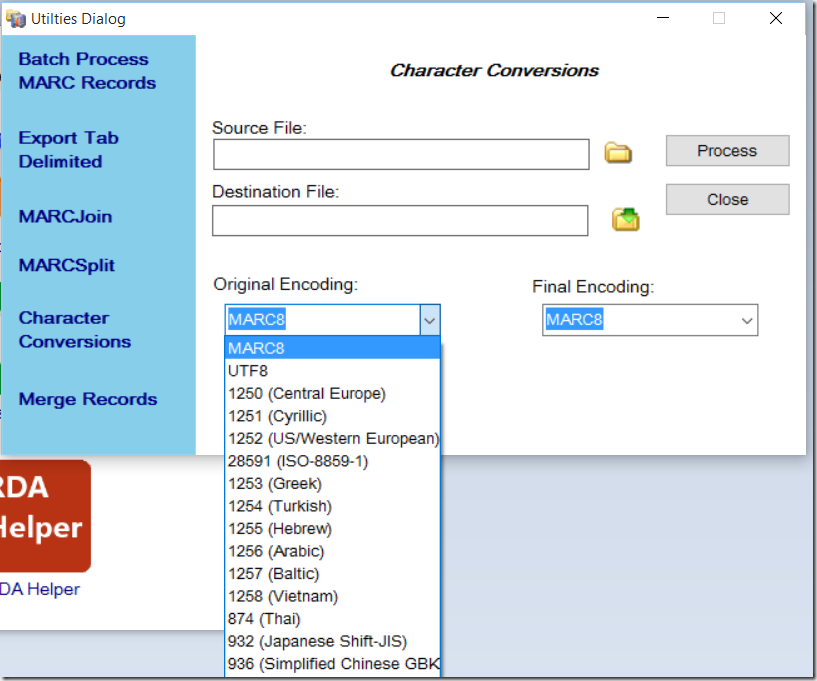

MarcEdit has also included a tool that allows users to convert data from one character encoding to another.

This tool requires users to identify the original characterset encoding of the file to be converted. Without that information, MarcEdit would have no idea which set of rules to apply when remapping characters from one set of codepoints to another. Unfortunately, a common problem that I hear from librarians, especially librarians in the United States who don’t regularly have to deal with this problem, is that they don’t know the file’s original characterset encoding, or how to find it. It comes up a lot, especially when retrieving data from some Eastern European and Asian publishers. In many of these cases, users send me files, and based on my experience looking at different encodings, I can make a couple of educated guesses and generally figure out how the data might be encoded.

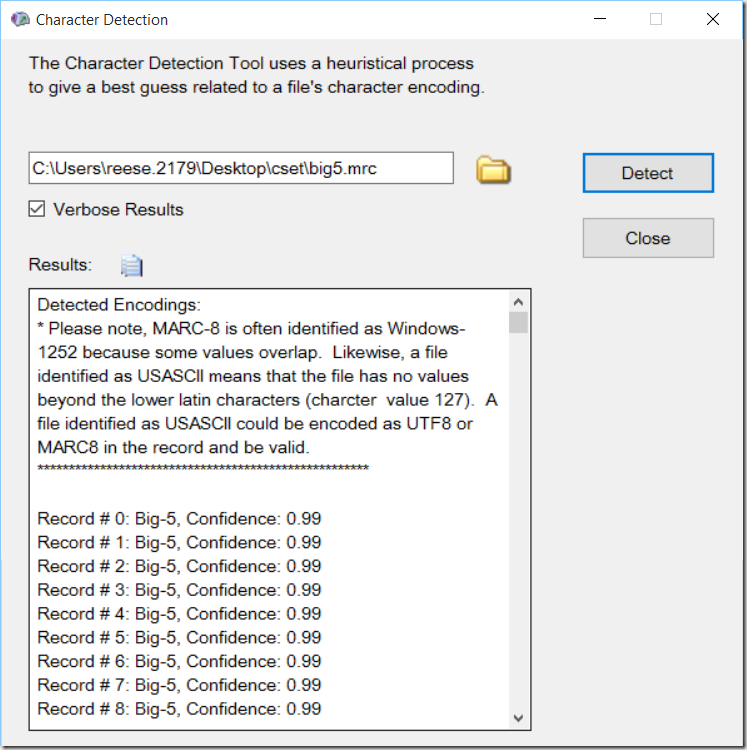
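For the curious, the core of any such conversion looks roughly like the sketch below. It is not MarcEdit’s converter (`CharsetConversion` and `ToUtf8` are hypothetical names), and it only illustrates why the source encoding has to be supplied by the caller. Note that on .NET Core and later, Windows codepages such as 1250, 1251, and 1256 are only available after registering `CodePagesEncodingProvider`; ISO-8859-1 works out of the box.

```csharp
using System;
using System.Text;

public static class CharsetConversion
{
    // Re-encode data as UTF-8. The caller must name the source encoding,
    // because the bytes themselves do not say how they were written.
    public static byte[] ToUtf8(byte[] data, string sourceEncodingName)
    {
        Encoding source = Encoding.GetEncoding(sourceEncodingName);
        return Encoding.Convert(source, Encoding.UTF8, data);
    }
}
```

For instance, `ToUtf8(new byte[] { 0xF8 }, "iso-8859-1")` yields the two bytes 0xC3 0xB8, the UTF-8 form of ø. Name the wrong source encoding and the call still succeeds; it just produces the wrong characters.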

Automatic Character Detection

Obviously, it would be nice if MarcEdit could provide some kind of automatic characterset detection. The problem is that this process is always fraught with errors. Since there is no way to definitively determine the characterset of a file or data simply by looking at the binary data, we are left having to guess. And this is where heuristics come in again.

Current-generation web browsers automatically set character encodings when rendering pages. They do this based on the presence of metadata in the header, information from the server, and a heuristic analysis of the data prior to rendering. This is why everyone has seen pages that the browser believes are in one character set but are actually in another, making the data unreadable when it renders. However, the process that browsers currently use is, as sad as this may be, the best we’ve got.

And so, I’m going to be pulling this functionality into MarcEdit. Mozilla has made the algorithm that they use public, and some folks have ported that code into C#. The library can be found on GitHub here: https://github.com/errepi/ude. I’ve tested it, and it works pretty well, though it is not even close to perfect. Unfortunately, this type of process works best when you have lots of data to evaluate, but most MARC records are just a few thousand bytes, which just isn’t enough data for a proper analysis. However, it does provide something, and maybe that something will give users working with data in an unknown character encoding a way to actually figure out how their data might be encoded.

The new character detection tools will be added to the next official update of MarcEdit (all versions).

And as I noted, this is one more tool to give users for evaluating their records. While detection may still only be a best guess, it’s likely a pretty good guess.

The MARC-8 problem

Of course, not all is candy and unicorns. MARC-8, the lingua franca for a wide range of ILS systems and libraries, complicates things. Unlike many of the localized codepages, which are well-defined standards in use by a wide range of users and communities around the world, MARC-8 is not. MARC-8 is essentially a made-up encoding; it simply doesn’t exist outside of the small world of MARC21 libraries. To a heuristic parser evaluating character encoding, MARC-8 looks like one of four different charactersets: US-ASCII, Codepage 1252, ISO-8859-1, and UTF-8. The problem is that MARC-8, as an escape-based encoding, reuses parts of a couple of different encodings. This really complicates the identification of MARC-8, especially in a world where other encodings may (and probably will) be present. To that end, I’ve had to add a secondary set of heuristics that evaluates data after detection, so that if the data is identified as one of these four types, some additional evaluation is done looking specifically for MARC-8’s fingerprints. This allows MARC-8 data to be correctly identified most of the time, but again, not always. It just looks too much like other standard character encodings. Again, it’s a good reminder that this tool is just a best guess at the characterset encoding of a set of records, not a definitive answer.
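To give a feel for what a "fingerprint" check might look like, here is a sketch of one possible test: scanning for the escape sequences MARC-8 uses to switch character sets. This is an assumption about the kind of check involved, not MarcEdit’s actual secondary heuristic, and `Marc8Fingerprint` and `HasMarc8Escape` are hypothetical names.

```csharp
using System;

public static class Marc8Fingerprint
{
    private const byte Escape = 0x1B;

    // Bytes that may directly follow ESC in MARC-8: the intermediates
    // ( , ) - $ that designate a set, plus g, b, p, s for Greek symbols,
    // subscript, superscript, and the return to ASCII.
    private const string Followers = "(),-$gbps";

    // Returns true if data contains ESC followed by one of those bytes.
    public static bool HasMarc8Escape(byte[] data)
    {
        for (int i = 0; i + 1 < data.Length; i++)
        {
            if (data[i] == Escape && Followers.IndexOf((char)data[i + 1]) >= 0)
            {
                return true;
            }
        }
        return false;
    }
}
```

A hit is a hint, not proof: other escape-based encodings in the ISO 2022 family use similar sequences, and a MARC-8 record that never leaves the default sets contains no escapes at all.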

Honestly, I know a lot of people would like to see MARC as a data structure retired. They write about it, talk about it, hope that BibFrame might actually do it. I get their point: MARC as a structure isn’t well suited for the way we process metadata today. Most programmers simply don’t work with formats like MARC, and fewer tools exist that make MARC easy to work with. Likewise, most evolving metadata models recognize that metadata lives within a larger context, and are taking advantage of semantic linking to encourage the linking of knowledge across communities. These are things libraries would like in their metadata models as well, and libraries will get there, though I think in baby steps. When you consider the train wreck that RDA adoption and development was for what we got out of it (at a practical level), making a radical move like BibFrame will require a radical change (and maybe an event that causes that change).

But I think that there is a bigger problem that needs more immediate action. The continued reliance on MARC-8 actually poses a bigger threat to the long-term health of library metadata. MARC, as a structure, is easy to parse. MARC-8, as a character encoding, is essentially a virus, one that we are continuing to let corrupt our data and lock it away from future generations. The sooner we can toss this encoding on the trash heap, the better it will be for everyone, especially since we are likely the passing of one generation away from losing the knowledge of how this made-up character encoding actually works. And when that happens, it won’t matter how the record data is structured, because we won’t be able to read it anyway.

–tr

Comments

3 responses to “MarcEdit: Thinking about Charactersets and MARC”

The continued reliance on MARC8, especially when UTF-8 is right there, is mind-numbing. YBP only provides MARC21 in MARC8, but Alma only accepts data in UTF-8 and ISO-8859-1. You can fudge it by importing MARC8 using ISO-8859-1, but that doesn’t seem like a particularly good idea. I presume Alma’s requirements are based on its basis in MARCXML rather than binary formats. Whatever the reason, it’s ridiculous that our vendors are using an arcane character encoding that isn’t compatible with a major ILS.

I would also like to point out that, of the myriad Character Sets documented in the IANA character sets, MARC8 is noticeably absent.

Importing MARC-8 as ISO-8859-1 is honestly a really problematic workaround. ISO-8859-1 and MARC-8 aren’t even close to being compatible. The only reason it likely works is that ISO-8859-1 defines the extended Latin range (bytes 128-255), and MARC-8 also stays within single bytes (0-255), using escape sequences to set and reset different character sets. So, while diacritics (especially European ones) use byte values that overlap, they don’t map to the same characters.

A great example: lowercase Scandinavian o (Latin small letter o with stroke) is defined as 0xF8 in ISO-8859-1. However, in MARC-8, this value is 0xB2. So, if you have a MARC-8 record and load it using an ISO-8859-1 encoding stream, your data will be imported incorrectly.

In this case, Alma is the system that needs to be updated. Until MARC21 as a specification deprecates the characterset (and they should), Alma and every ILS system really should continue to support MARC-8. I would argue, however, that it’s time for the Library of Congress and the MAC committee to put this particular encoding to bed.

–TR

Yeah, I was rather skeptical when I was made aware of that decision. Apparently some tests were done, but I don’t know how heavily diacritical they were, and I doubt that any non-Latin characters were used.

I actually don’t think they mean ISO-8859-1. The only way you can select that option for import is if you’re using MARC21 binary format and ISO-8859-1 and, unless I’m mistaken, ISO-8859-1 isn’t an option for MARC21.