Now that MarcEdit 7 is available for alpha testers, I’ve been getting back some feedback on the new task processing. Some of this feedback relates to a couple of errors showing up in tasks that request user interaction…other feedback is related to the tasks themselves and continued performance improvements.

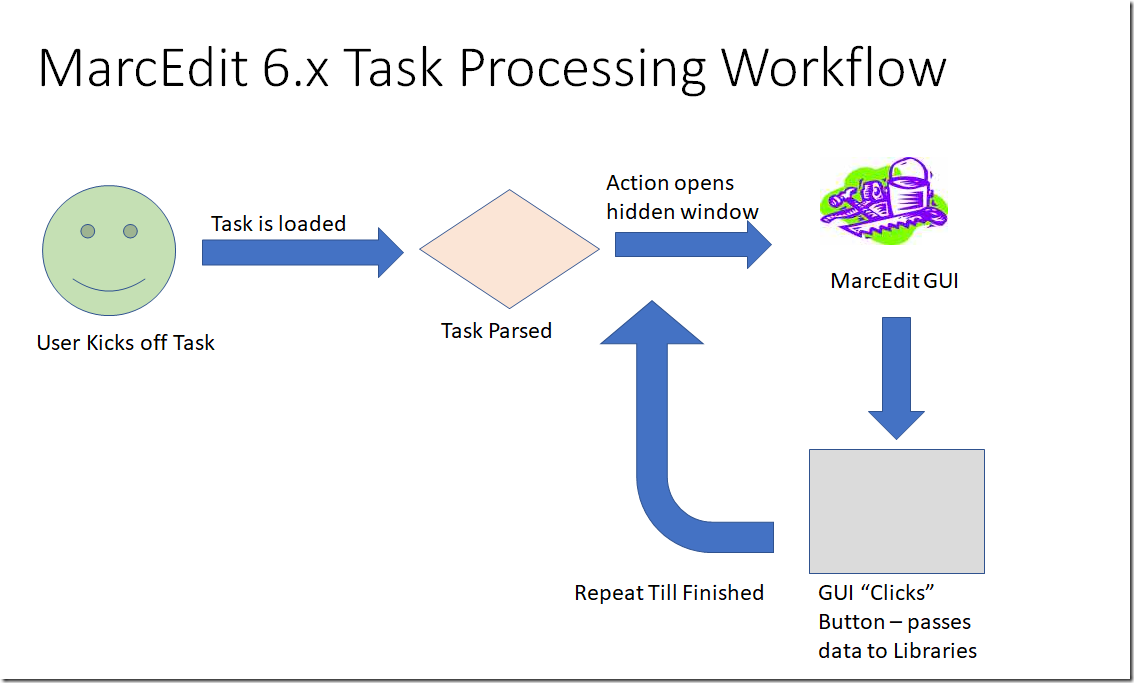

In this implementation, one of the areas that I am really focusing on is performance. To that end, I changed the way that tasks are processed. Previously, task processing looked very much like this:

A user would initiate a task via the GUI or command-line, and once the task was processed, the program would then, via the GUI, open a hidden window that would populate each of the “task” windows and then “click” the process button. Essentially, it was working much like a program that “sends” keystrokes to a window, but in a method that was a bit more automated.

This process had some pros and cons. On the plus side, Tasks were added in MarcEdit 6.x, so this approach allowed me to add the task processing functionality without tearing the program apart. That was a major win, as tasks then were just a simple matter of processing the commands and filling a hidden form for the user. On the con side, the task processing had a number of hidden performance penalties. While tasks automated processing (which allowed for improved workflows), each task processed the file separately, and after each process, the file would be reloaded into the MarcEditor. Say you had a file that took 10 seconds to load and a task list with 6 tasks. The file loading alone would cost you a minute. Now, consider if that same file had to be processed by a task list with 60 different task elements: that would be 10 minutes dedicated just to file loading and unloading, and that doesn’t count the time to actually process the data.
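To make the reload penalty concrete, here is a small back-of-the-envelope sketch of the arithmetic above. It is not MarcEdit code; the function name is made up for illustration, and it assumes exactly one file reload per task.

```python
def load_overhead_seconds(load_seconds: float, task_count: int) -> float:
    """Time spent only on reloading the file, assuming one reload per task."""
    return load_seconds * task_count


# The two cases from the text: a 10-second file with 6 and with 60 tasks.
for tasks in (6, 60):
    minutes = load_overhead_seconds(10, tasks) / 60
    print(f"{tasks} tasks -> {minutes:g} minute(s) spent just loading the file")
```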

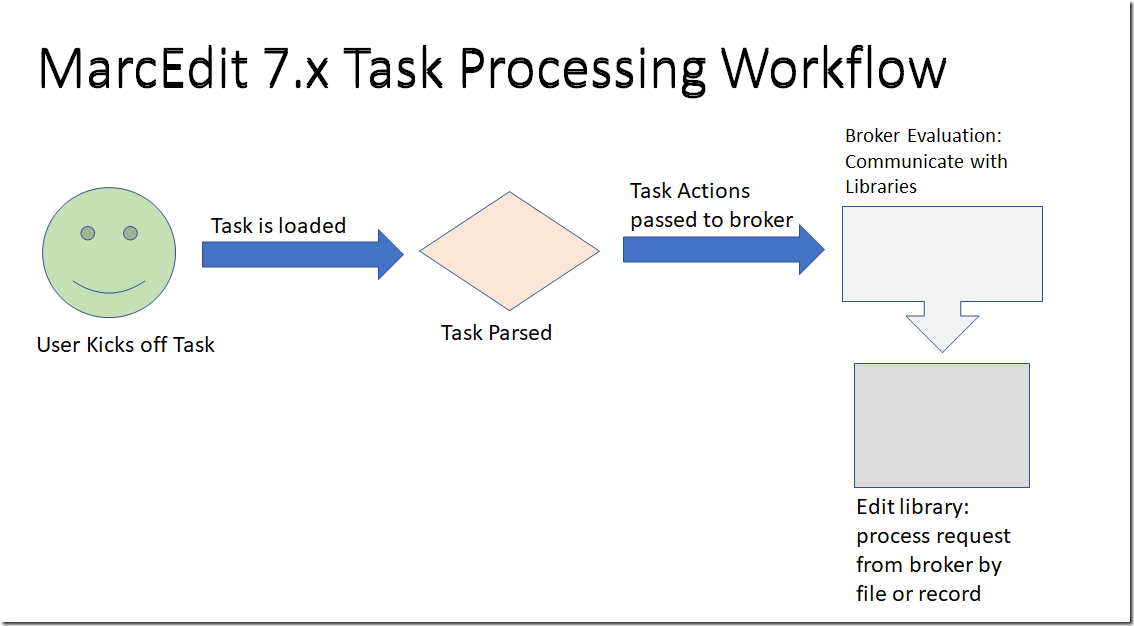

This was a problem, so with MarcEdit 7, I took the opportunity to actually tear down the way that tasks work. This meant divorcing the application from the task process and creating a broker that could evaluate tasks being passed to it, and manage the various aspects of task processing. This has led to the development of a process model that looks more like this now:

Once a task is initiated and it has been parsed, the task operations are passed to a broker. The broker then looks at the task elements and the file to be processed, and negotiates those actions directly with the program libraries. This removes any file loading penalties, and allows me to manage memory and temporary file use in a much more granular way. It also immediately speeds up the process. Take that file that takes 10 seconds to load and has 60 tasks to complete: immediately, you improve processing time by 10 minutes. But the question still arises: could I do more?

And the answer to this question is actually yes. The broker has the ability to process tasks in a number of different ways. One of these is handling each task one by one at the file level; the other is handling all tasks at once, but at the record level. You might think that record-level processing would always be faster, but it’s not. Consider the task list with 60 tasks. Some of these elements may only apply to a small subset of records. In the by-file process, I can quickly shortcut processing of records that are out of scope; in a record-by-record approach, I actually have to evaluate the record. So, in testing, I found that when records are smaller than a certain size, and the number of task actions to process is within a certain number (regardless of file size), it was almost always better to process the data by file. Where this changes is when you have a larger task list. How large? I’m still trying to figure that out. But as an example, I had a real-world case sent to me that has over 950 task actions to process on a file ~350 MB (344,000 records) in size. While the by-file process is significantly faster than the MarcEdit 6.x method (where each process incurred a 17 second file load penalty), it still takes a lot of time because you are doing 950+ actions and complete file reads. While this type of processing might not be particularly common (I do believe this is getting into outlier territory), it helps to illustrate what I’m trying to teach the broker to do. I ran this file using the three different processing methodologies, and here are the results:

- MarcEdit 6.3.x: 962 Task Actions, completing in ~7 hours

- By File: 962 Task Actions, completing in 3 hours, 12 minutes

- By Record: 962 Task Actions, completing in 2 hours and 20 minutes
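The difference between the two MarcEdit 7 strategies is essentially the order of two nested loops. The sketch below is only an illustration under simplifying assumptions: records are modeled as lists of `(tag, value)` tuples, tasks are plain functions, and every name here is hypothetical rather than part of MarcEdit. It does not model the out-of-scope shortcut or the file reads that make the real timings differ.

```python
from typing import Callable, List, Tuple

Field = Tuple[str, str]            # (tag, value): a simplified stand-in for a MARC field
Record = List[Field]
Task = Callable[[Record], Record]  # a task takes a record and returns the edited record


def process_by_file(records: List[Record], tasks: List[Task]) -> List[Record]:
    """Run one task over the whole file, then move on to the next task."""
    for task in tasks:
        records = [task(rec) for rec in records]
    return records


def process_by_record(records: List[Record], tasks: List[Task]) -> List[Record]:
    """Run every task, in order, on one record before moving to the next record."""
    out = []
    for rec in records:
        for task in tasks:
            rec = task(rec)
        out.append(rec)
    return out


def uppercase_245(rec: Record) -> Record:
    """Hypothetical task: uppercase the value of every 245 field."""
    return [(tag, val.upper() if tag == "245" else val) for tag, val in rec]


def add_999(rec: Record) -> Record:
    """Hypothetical task: append a local 999 field."""
    return rec + [("999", "processed")]


records = [[("245", "a title")], [("100", "an author"), ("245", "another title")]]
tasks = [uppercase_245, add_999]

# Tasks still run in sequence per record, so both orderings give the same result.
print(process_by_file(records, tasks) == process_by_record(records, tasks))
```

Because each task only ever sees the output of the task before it for a given record, the results match; what changes is how many passes are made over the file and how much work can be skipped along the way.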

So, that’s still a really, really long time, but take a closer look at the file and the changes made, and you can start to see why this process takes so much time. Looking at the results file, >10 million changes have been processed against the 340,000+ records. Also, consider the number of iterations that must take place. The average record has approximately 20 fields. Since each task needs to act upon the results of the task before it, it’s impossible to have tasks process at the same time; rather, tasks must happen in succession. This means that each task must process the entire record, as the results of a task may require an action based on data changed anywhere in the record. So for one record, the program needs to run 962 operations, which means looping through 19,240 fields (assuming no fields are added or deleted). Extrapolate that number for 340,000 records, and the program needs to evaluate 6,541,600,000 fields, or over 6 billion field evaluations, which works out to 49,557,575 field evaluations per minute.
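The field-evaluation totals above can be reproduced in a few lines. The constants come straight from the figures in this post (962 task actions, roughly 20 fields per record, 340,000 records); the variable names are only for illustration.

```python
TASK_ACTIONS = 962
AVG_FIELDS_PER_RECORD = 20   # approximate average, per the text
RECORDS = 340_000

# Every task walks every field of the record, and tasks run in sequence.
fields_per_record = TASK_ACTIONS * AVG_FIELDS_PER_RECORD
total_field_evaluations = fields_per_record * RECORDS

print(f"{fields_per_record:,} field evaluations per record")    # 19,240
print(f"{total_field_evaluations:,} field evaluations in total")  # 6,541,600,000
```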

Ideally, I’d love to see the processing time for this task/file pair down around 1 hour and 30 minutes. That would cut the current MarcEdit 7 processing time in half, and be almost 5 hours and 30 minutes faster than the current MarcEdit 6.3.x processing. Can I get the processing down to that number? I’m not sure. There are still optimizations to be had (loops that can be optimized, buffering, etc.), but I think the biggest potential speed gains may come from adding some pre-processing to a task process to do a cursory evaluation of a recordset if a set of find criteria is present. This wouldn’t affect every task, but it could potentially improve selective processing of the Edit Indicator, Edit Field, Edit Subfield, and Add/Delete Field functions. This is likely the next area that I’ll be evaluating.
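One way to picture that kind of pre-processing is a cheap scan of the raw record for the find text before doing the full edit. This is only a sketch of the idea, not how MarcEdit implements (or will implement) it: records are plain strings, the find criteria is a simple substring, and `run_task_with_prefilter` is a hypothetical helper.

```python
from typing import Callable, Iterable, List, Optional, Tuple


def run_task_with_prefilter(
    records: Iterable[str],
    find_text: Optional[str],
    edit: Callable[[str], str],
) -> Tuple[List[str], int]:
    """Apply `edit` only to records that pass a cheap substring scan.

    Returns the processed records and the number of full edits performed.
    """
    processed: List[str] = []
    edits_run = 0
    for raw in records:
        if find_text is not None and find_text not in raw:
            # The find text is absent, so the edit cannot apply; pass the record through.
            processed.append(raw)
            continue
        processed.append(edit(raw))
        edits_run += 1
    return processed, edits_run


sample = ["245 $a Cats of the world", "245 $a Dogs of the world", "650 $a Cats"]
result, edits_run = run_task_with_prefilter(
    sample, "Cats", lambda rec: rec.replace("Cats", "Felines")
)
print(result)
print(f"full edits run: {edits_run} of {len(sample)} records")
```

The saving only materializes when the scan is much cheaper than the edit itself and when a meaningful share of records is out of scope, which is why this would help some task types more than others.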

Of course, the other question to solve is where exactly the tipping point lies at which By File processing becomes less efficient than By Record processing. My guess is that the characteristic most applicable to this decision will be the number of task actions needing to be processed. For example, splitting this file into a file of 1,000 records and running the same task list by record versus by file, we see the following:

- By File processing, 962 Task Actions, completed in: 0.69 minutes

- By Record Processing, 962 Task Actions, completed in: 0.36 minutes

In absolute terms the processing times are close, but By Record processing is nearly twice as fast as By File processing. If we reduce the number of tasks to under 20, there is a dramatic switch in the processing time and By File processing is the clear winner.

Obviously, there is some additional work to be done here, and more testing to do to understand which characteristics and which processing style will lead to the greatest processing gains, but from this testing, I came away with a couple of pieces of information. First, the MarcEdit 7 process, regardless of method used, is way faster than MarcEdit 6.3.x. Second, both the MarcEdit 7 process and the MarcEdit 6.3.x process suffered from a flaw related to temp file management. You can’t see it unless you work with files this large and with this many tasks, but the program cleans up temporary files only after all processing is complete. Normally, in a single-operation environment, that happens right away. Since each task represents a single operation, ~962 temporary files at 350 MB per file were created as part of both processes. That’s 336,700 MB, or roughly 336 GB, of temporary data! When you close the program, that data is all cleared, but again, ouch. As I say, normally you’d never see this kind of problem, but in this kind of edge case, it shows up clearly. This has led me to implement periodic temp file cleanup so that no more than 10 temporary files are stored at any given time. While that still means that in the case of this test file, roughly 3.5 GB of temporary data could be stored (10 files at ~350 MB each), the size of that temp cache would never grow larger. This seems to be a big win, and something I would never have seen without working with this kind of data file and use case.
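A capped temp file pool along those lines might look something like the following. This is a minimal sketch, not MarcEdit’s actual cleanup code: the `TempFilePool` class is hypothetical, and it assumes the oldest temporary file is always safe to delete once the cap is exceeded.

```python
import os
import tempfile
from collections import deque


class TempFilePool:
    """Hands out temp files but never keeps more than `max_files` on disk."""

    def __init__(self, max_files: int = 10):
        self.max_files = max_files
        self._paths = deque()  # oldest path sits on the left

    def new_file(self) -> str:
        fd, path = tempfile.mkstemp(suffix=".tmp")
        os.close(fd)  # only the path is needed here; callers reopen it themselves
        self._paths.append(path)
        # Periodic cleanup: evict the oldest files once the cap is exceeded.
        while len(self._paths) > self.max_files:
            os.remove(self._paths.popleft())
        return path

    def cleanup(self) -> None:
        """Remove everything that is still on disk (e.g. when the program closes)."""
        while self._paths:
            path = self._paths.popleft()
            if os.path.exists(path):
                os.remove(path)


# Worst case for the test file in this post, before and after capping the pool.
FILE_MB = 350
print(f"uncapped: {962 * FILE_MB:,} MB")  # 336,700 MB
print(f"capped:   {10 * FILE_MB:,} MB")   # 3,500 MB
```

Usage would be one `new_file()` call per task action, with a final `cleanup()` when the task list finishes.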

Finally, let’s say after all this work, I’m able to hit the best-case benchmark (1 hr. 30 min.) and a user still feels that this is too long. What more could be done? Honestly, I’ve been thinking about that…but really, very little. There will be a performance ceiling given how MarcEdit has to process task data. So for those users, if this kind of processing time isn’t acceptable, I believe only a custom-built solution would provide better performance. But even with a custom build, I doubt you’d see significant gains if one continued to require tasks to be processed in sequence.

Anyway, this is maybe a deeper dive into how tasks work in MarcEdit 6.3.x and how they will work in MarcEdit 7 than anyone was really looking for, but this particular set of files and use case represented an interesting opportunity to really test the various methods and provide benchmarks that easily demonstrate the impact of the current task process changes.

If you have questions, feel free to let me know.

–tr
